Brief wake-up call about false negatives.

Preliminary note: we recommend having this song on hand while you read this article: What I Am (Edie Brickell & The New Bohemians, 1988)

It is said that an Arabian proverb reads:

He who knows, and knows that he knows, is wise; follow him. He who knows not, and knows that he knows not, is a student; teach him. He who knows, and knows not that he knows, is asleep; wake him. He who knows not, and knows not that he knows not, is a fool; shun him.

Many of you may already know it. In this article I invite you to play with the idea of an internal predictor: the idea each of us has of who we are and how we look. The intention is to explore freely the meaning of what is predicted and the possible classification errors. We will not go into the details of numerical quantification.

Before turning to the example, and at the risk of repeating what some of you already know, let’s review some concepts:

• A predictor is a model or algorithm that allows us to estimate, based on information we have (input variables, or features), some result that depends on them (output variable, or label). This result can be a class label or a numerical value. In the first case we typically talk about classifiers, and in the second case about regressors. A predictor can be as trivial and random as tossing a coin, in which case the yes/no answer will be right about 50% of the time.

• In order to evaluate how good our predictor is, we need to compare the results obtained against reality. In the simplest case, we want to predict whether a certain property is met (Positive) or not (Negative). That is, our output variable is a binary label. Then we talk about:

o Positives: the objects that, according to the predictor, fulfill the property we want to predict.

o Negatives: the objects that, according to the predictor, do not fulfill the property.

When we contrast the results generated by the predictor against reality we talk about:

o True: the objects that really fulfill the property of interest

o False: the objects that don’t fulfill the property of interest.

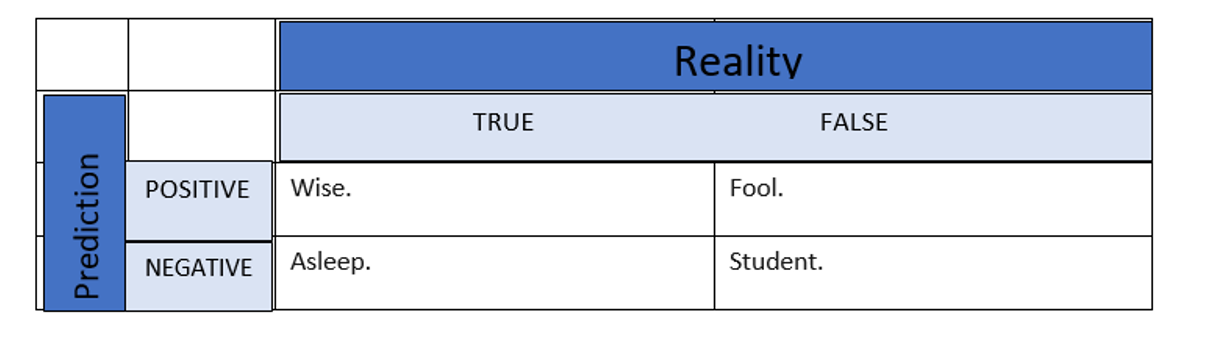

We are now in a position to contrast the predicted (Positive, Negative) against reality (True, False). This is what we call confusion matrix, a common topic in machine learning.
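As a rough illustration of how those four cells are counted, here is a minimal Python sketch. The function name `confusion_counts` and the toy data are invented for this example. It uses the usual convention that True/False says whether the prediction matched reality.

```python
def confusion_counts(actual, predicted):
    """Count the four outcomes for two equal-length lists of booleans."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for real, guess in zip(actual, predicted):
        if guess and real:
            counts["TP"] += 1   # said Positive, and it was
        elif guess and not real:
            counts["FP"] += 1   # said Positive, but it was not (false alarm)
        elif not guess and not real:
            counts["TN"] += 1   # said Negative, and it was
        else:
            counts["FN"] += 1   # said Negative, but it was Positive (a miss)
    return counts


# Hypothetical toy data: what really happened vs. what the predictor said.
actual = [True, True, False, False, True, False]
predicted = [True, False, False, True, True, False]

counts = confusion_counts(actual, predicted)
print(counts)  # {'TP': 2, 'FP': 1, 'TN': 2, 'FN': 1}
```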

Returning to the Arabian proverb (and now is a good time to play the song by Edie Brickell), imagine that each individual would like to predict his own wisdom, reduced to the binary problem of “knowing or not knowing”. The prediction is what the individual believes about himself; reality is whether he actually knows. The Positive class will be “knows.” So we have the following confusion matrix:

According to the proverb, we can assign an interpretation to each situation, obtaining the following table:

In statistics, the false positive is also known as a false alarm, or type I error. The false negative is a type II error.

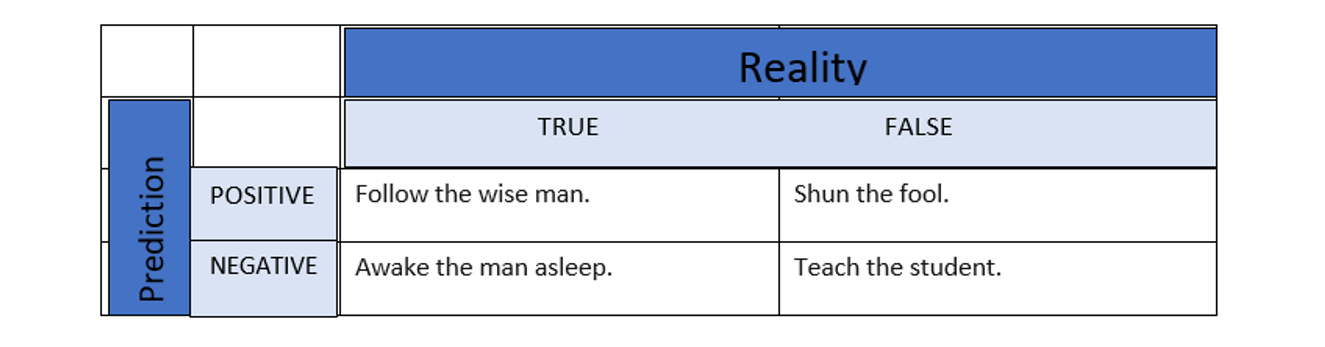

Finally, and following the proverb, we can attribute a decision to each interpretation:

Here we can make several statements according to the inference directives and the set of rules suggested by the text:

• True positives are examples to follow. They are the best thing that can happen to us in terms of precision and coverage (recall).

• False positives should be avoided, in addition to the fact that they degrade the precision of the predictor.

• False negatives are not aware of their potential; once they are identified, they must be helped.
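To make the mapping concrete, the proverb's four cases can be sketched as a small lookup table in Python. The function name `proverb_case` and its arguments are hypothetical. The sketch reads "knows not that he knows not" as "believes that he knows", which is an interpretation of the proverb rather than something it states literally.

```python
def proverb_case(knows, believes_he_knows):
    """Map reality (knows) and self-prediction (believes_he_knows)
    to a confusion-matrix cell, the proverb's label and its advice."""
    table = {
        # (reality, prediction): (cell, interpretation, decision)
        (True, True): ("true positive", "wise", "follow him"),
        (False, False): ("true negative", "student", "teach him"),
        (True, False): ("false negative", "asleep", "wake him"),
        (False, True): ("false positive", "fool", "shun him"),
    }
    return table[(knows, believes_he_knows)]


for knows in (True, False):
    for believes in (True, False):
        cell, label, decision = proverb_case(knows, believes)
        print(f"knows={knows}, believes={believes}: {cell}, {label}, {decision}")
```

Read this way, the person who is asleep is the false negative: he has the property but predicts that he does not.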

We could continue philosophizing. For that, I invite you to listen to Filosofía Barata y Zapatos de Goma, by Charly García (1990).

Beyond fables, to know whether a predictor or classifier works well, the fundamental measures are precision and coverage (recall), both of which can be read off the confusion matrix, or elaborations on them.
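For reference, both measures come straight from the cells of the confusion matrix: precision is TP / (TP + FP) and recall is TP / (TP + FN). A minimal sketch follows. The counts are made up, and returning 0.0 when the denominator is zero is a convention chosen here, not a universal rule.

```python
def precision(tp, fp):
    """Of everything predicted Positive, the fraction that really was."""
    return tp / (tp + fp) if (tp + fp) else 0.0  # 0.0 on empty denominator: an assumption


def recall(tp, fn):
    """Of everything that really was Positive, the fraction we caught."""
    return tp / (tp + fn) if (tp + fn) else 0.0  # same assumption as above


# Hypothetical counts: 8 true positives, 2 false positives, 4 false negatives.
tp, fp, fn = 8, 2, 4
print(f"precision = {precision(tp, fp):.3f}")  # 8 / 10
print(f"recall    = {recall(tp, fn):.3f}")     # 8 / 12
```

False positives only lower precision, and false negatives only lower recall, which is why the two are usually reported together.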

In practice, a predictor maps business cases to ratios or probabilities of events. Some examples of these probabilities, taken from cases we have worked on, are:

• that a given transaction is fraudulent

• that a customer soon becomes a debtor

• that, given my shopping history, I find an item interesting

• that a set of symptoms corresponds to a given disease diagnosis

• that a customer stops being a customer

• that an object of a particular class has been correctly identified in an image

How to weigh precision against coverage, since improving one usually comes at the expense of the other, will depend on the business case. So will the choice of the confidence threshold above which we accept a prediction as Positive.
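The effect of the threshold can be seen in a small Python sketch. The scores and labels are invented for illustration, and `precision_recall_at` is a hypothetical helper, not part of any library. A case is predicted Positive when its score is at or above the threshold.

```python
def precision_recall_at(threshold, scores, actual):
    """Precision and recall when score >= threshold is predicted Positive."""
    predicted = [s >= threshold for s in scores]
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(not p and a for p, a in zip(predicted, actual))
    prec = tp / (tp + fp) if (tp + fp) else 0.0  # 0.0 when nothing is predicted Positive
    rec = tp / (tp + fn) if (tp + fn) else 0.0   # 0.0 when nothing is really Positive
    return prec, rec


# Hypothetical predictor scores and the real outcome of each case.
scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
actual = [True, True, False, True, False, False]

for threshold in (0.9, 0.5, 0.2):
    prec, rec = precision_recall_at(threshold, scores, actual)
    print(f"threshold {threshold}: precision {prec:.2f}, recall {rec:.2f}")
```

With this toy data, a strict threshold of 0.9 gives perfect precision but catches only one of the three real positives, while a lenient threshold of 0.2 catches all three at the cost of two false alarms.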

Fernando López Bello @fer_lopezbello

Computing Engineer and Big Data Specialist