ETHICS, HEALTH and AI

– A robot may not injure a human being or, through inaction, allow a human being to come to harm.

– A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

– A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

Handbook of Robotics, 56th Edition, 2058 A.D.

In the 1950s, in many of his novels, Isaac Asimov was already showing concern for the moral decisions robots could make. On the page preceding the introduction of the book “I, Robot”, he lists his three famous laws of robotics, quoted above.

Nowadays, as technology is used more and more in daily life, we must bear in mind that it can provide excellent benefits but can also cause great damage. The impact of technology should be analyzed from several points of view, such as the social, the human and the environmental.

Ethics

Since ancient times, ethics has been an important subject of discussion. Provided they are free to act, every human being has morals. Morality is understood as the rules or habits one follows in order to behave properly. Ethics studies human behavior and provides the foundation for morality. Morality answers the question “what should I do?”; ethics answers the question “why?”

There are indications that moral rules were already in use in prehistoric times, mostly related to survival. At the time of the first great kingdoms (Assyrians, Egyptians, etc.), morals had more to do with what the gods commanded (e.g., the Ten Commandments) and with what would serve the rulers (pharaohs, kings, etc.).

In ancient Greece, whose gods shared many characteristics with humans, ethics and morals were “rationalized” by philosophers and distanced from religion and rulers. Socrates related virtue to knowledge: “let us make men wise and they will be good.” Aristotle said that ethics was “the good life.” The Greeks thought that happiness could only be achieved by leading a virtuous life.

It was in this context, some 25 centuries ago, that Hippocrates wrote the “Hippocratic Oath”, one of his many contributions to medicine.

Below are some of the commitments that are part of this oath:

- Put all one's effort and intelligence into the practice of the profession.

- Avoid wrong-doings and injustices.

- Practice the profession with innocence and purity.

- Do good in the house of the sick.

- Teach medicine.

- Keep secret what is seen or heard.

Since it was written, this oath has been used in many different regions of the planet. It is interesting to observe that, even at that time, the confidentiality of sensitive data was already addressed (keep secret what is seen or heard).

With the Declaration of Geneva in 1948, the World Medical Association (WMA) updated the oath, and it did so again in 2017 (see here).

In the 19th century, the term deontology emerged as a new name for ethics. Over time, however, it was redefined as ethics applied to a profession. Deontology is currently considered the branch of ethics that deals with the duties governing the exercise of a profession. Every profession or occupation can have its own deontology that sets out the duties of each individual.

The “responsibility gap” of Artificial Intelligence

Returning to what I mentioned before, computer applications are of vital importance in many areas of society. This means that IT professionals can do “good things” or cause harm, and can allow or influence others to do good or cause harm, without even being aware of it. Artificial intelligence applications are not only software artifacts that perform certain tasks: they also learn, can “teach themselves” from experience, and then act on that new knowledge. This creates the risk of them “escaping” the control of their own creators.

David Gunkel, a philosopher of robotics and ethics at Northern Illinois University, said: “We’re now at a point where we have AI that are not directly programmed.” “They develop their own decision patterns.”

“Who is able to answer for something going right or wrong?”

Gunkel calls this dilemma the “responsibility gap” of artificial intelligence.

The dilemma, then, is who is responsible for the actions that certain artificial intelligence applications take. One example is the problem with Microsoft’s Tay, a chatbot launched in 2016. It was designed to interact with people on social media and was “trained” (it learned) on the posts and responses of the participants. However, many participants began to feed it racist, sexist and other unwanted content. As a consequence, Tay became a racist and sexist chatbot and had to be deactivated (learn more here).

Due to the rapid development of artificial intelligence and robotics in recent years, it is becoming increasingly necessary to define new ethical standards for the software industry that constitute a globally recognized framework, with the aim of preserving the rights and freedom of human beings without stopping technological development. Some questions that arise are: can a machine make a moral decision? Isn’t ethics an essentially human quality?

Generating moral standards related to AI

Careful efforts are being made to face this dilemma. One example is the European Union, which has developed a set of seven ethical requirements that companies should apply to their artificial intelligence developments.

- Human agency and oversight: AI systems should enable equitable societies by supporting human agency and fundamental rights, and should not diminish, limit or misguide human autonomy.

- Technical robustness and safety: trustworthy AI requires algorithms to be secure, reliable and robust enough to deal with errors or inconsistencies during all life-cycle phases of an AI system.

- Privacy and data governance: citizens should have full control over their own data, and their data should not be used to harm or discriminate against them.

- Transparency: traceability of AI systems must be guaranteed.

- Diversity, non-discrimination and fairness: AI systems must consider the whole range of human abilities, skills and requirements, and ensure accessibility.

- Societal and environmental well-being: AI systems should be used to enhance positive social change and improve sustainability and environmental responsibility.

- Accountability: mechanisms should be put in place to ensure responsibility and accountability for AI systems and their outcomes.

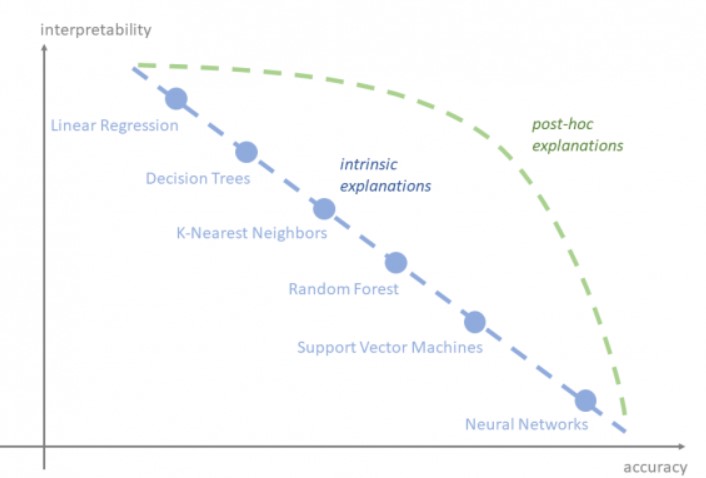

For a model to be put into use, particularly in health, it is critical that it be explainable (why) and interpretable or traceable (how), so that the path leading to a machine-learning-based conclusion can be understood. This is why today we speak of the “right to explanation” in the context of the GDPR.

The chart above compares several artificial intelligence techniques in terms of the interpretability and accuracy of their predictions.

In an interview with FM Del Sol, Uruguayan AI specialist Pablo Sprechmann said that DeepMind, the company he works for, states in its statutes that it does not work on military projects. I find this an interesting example of a private initiative regarding AI and ethics.
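To make the idea of interpretability a little more concrete, here is a minimal sketch in Python of a model whose prediction can be traced back to each input. It assumes a simple additive (logistic) score; the feature names, the weights and the `explain_prediction` helper are hypothetical, chosen only for illustration, and have no clinical meaning.

```python
import math

# Hypothetical weights for an illustrative risk score; these are NOT clinical values.
WEIGHTS = {"age_over_60": 0.8, "smoker": 1.1, "high_blood_pressure": 0.6}
BIAS = -2.0


def explain_prediction(patient):
    """Return the predicted probability and each feature's additive contribution."""
    # Each feature contributes weight * value to the score, so the "how" is fully traceable.
    contributions = {name: w * patient.get(name, 0) for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    return probability, contributions


probability, contributions = explain_prediction(
    {"age_over_60": 1, "smoker": 1, "high_blood_pressure": 0}
)

# List the features from most to least influential for this patient.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
print(f"probability: {probability:.2f}")
```

With a model of this kind, every prediction comes with the list of factors that produced it. More complex techniques may predict more accurately but do not offer such a direct breakdown, which is the trade-off between interpretability and accuracy.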

Rules and laws for Health Data Management

Next, I will mention other examples oriented towards data management.

In 2016, the WMA (World Medical Association) issued the Declaration of Taipei on Ethical Considerations regarding Health Databases and Biobanks (learn more here).

In our country, Uruguay, the implementation of the National Electronic Health Record (NEHR) was accompanied by laws such as the following:

- Law 18.331, the “Law on protection of personal data”, passed in 2008. It protects people's “sensitive” data (in Europe, the GDPR plays this role).

- Law 18.335: regulates the rights and obligations of patients and users of health services with respect to health workers and health care services. It includes aspects related to the use of the EHR (Electronic Health Record) and NEHR.

In the USA, HIPAA (the Health Insurance Portability and Accountability Act) applies; among other aspects, it regulates the confidentiality of health-related data.

Reality is changing

Heraclitus, around the 6th century BC, already proclaimed that the world, and therefore human beings, are in constant change. The same happened with Asimov's characters. The three laws of robotics that he initially formulated and used in several of his novels represented the moral code that robots should respect. But, in the future depicted in his novels, they proved insufficient to keep the invention from “dominating” the inventor…

It is then that the “Zeroth Law of robotics” appears for the first time, in Isaac Asimov’s novel “Robots and Empire” (1985): “A robot may not harm humanity, or through inaction allow humanity to come to harm.”

Business platform consultant

PS: I thank Fernando López for reading this post while it was still a draft and giving me some suggestions. I also thank Edgardo Noya, whom I consulted for his expertise on Asimov's bibliography.